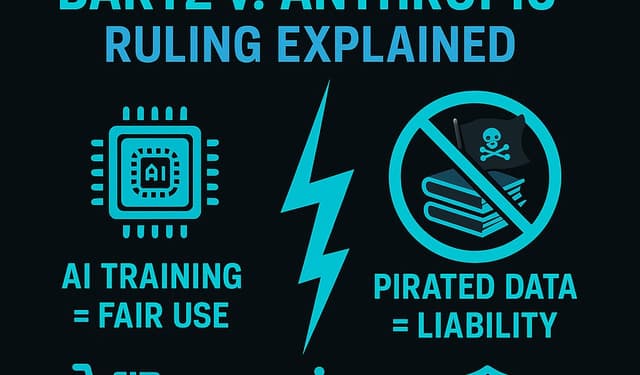

Judge William Alsup just handed down what I believe will be one of THE landmark AI decisions we'll see this decade. The federal court's split ruling in Bartz v. Anthropic does something remarkable: it validates AI training as fair use while simultaneously condemning the piracy that often enables it. This isn't just a win or loss for Anthropic—it's a blueprint for how courts will likely approach the dozens of AI copyright cases working their way through the system.

Yes, training Claude on millions of books constitutes fair use. No, downloading those same books from pirate sites doesn't get a free pass. The distinction matters because it fundamentally reshapes how AI companies must think about data acquisition.

The judge's reasoning on fair use particularly struck me. He describes AI training as "quintessentially transformative," comparing it to how human writers learn from reading. "Everyone reads texts, too, then writes new texts," Alsup writes. "To make anyone pay specifically for the use of a book each time they read it, each time they recall it from memory, each time they later draw upon it when writing new things in new ways would be unthinkable."

This analogy—AI as reader learning to write—provides the conceptual foundation that I think AI companies have been desperately seeking. It's not about copying; it's about learning patterns, understanding language, and creating something fundamentally new.

Here's where I think Anthropic's story gets really interesting. After building their initial models on pirated content from Books3, Library Genesis, and other dubious sources, the company made a dramatic shift in 2024. They hired Tom Turvey, the former head of Google's book-scanning project, with a mandate to obtain "all the books in the world" through legitimate means.

Anthropic then spent millions of dollars purchasing physical books, many of them second-hand, which they proceeded to slice from their bindings and scan into digital format. The physical books were destroyed in the process, but the court ruled that converting those lawfully purchased copies to digital form was fair use. This expensive pivot from piracy to purchase reveals something I've been saying for a while: AI companies can afford to do this right. They're choosing not to.

Consider what Anthropic's spending reveals: they paid millions for books, often buying used copies at market rates. This money flows back into the book ecosystem: to retailers, to distributors, and ultimately to the market for authors' works. If every AI company followed this model instead of scraping pirate sites, we'd see a substantial new revenue stream for the publishing industry.

The court stressed that it matters whether AI systems "directly compete with the originals." Since Claude doesn't spit out verbatim passages from novels, the technology complements rather than replaces human authorship.

I see this as establishing a sustainable equilibrium: AI companies must pay for access to training materials, supporting the creative economy, while authors benefit from AI tools that help readers discover and engage with human-written works.

With damages still to be determined, I calculate Anthropic faces potential liability in the billions. Statutory damages for willful infringement can reach $150,000 per work, and we're talking about millions of books. This creates a powerful deterrent effect: train responsibly or face existential financial risk.
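To make that arithmetic concrete, here is a rough sketch of how exposure scales with the number of works. The per-work figures are the statutory damages range in 17 U.S.C. § 504(c) ($750 minimum, $30,000 ordinary maximum, $150,000 willful maximum). The work count is purely an assumption for illustration, not a figure from the ruling, and the `exposure` helper is a hypothetical name of my own.

```python
# Statutory damages per infringed work under 17 U.S.C. § 504(c).
STATUTORY_MIN = 750
STATUTORY_MAX_ORDINARY = 30_000
STATUTORY_MAX_WILLFUL = 150_000


def exposure(num_works: int, per_work: int) -> int:
    """Total exposure if every work draws the same per-work award."""
    if num_works < 0 or per_work < 0:
        raise ValueError("inputs must be non-negative")
    return num_works * per_work


# ASSUMPTION: a round, hypothetical count of infringed works.
hypothetical_works = 1_000_000

for label, rate in [
    ("statutory minimum", STATUTORY_MIN),
    ("ordinary maximum", STATUTORY_MAX_ORDINARY),
    ("willful maximum", STATUTORY_MAX_WILLFUL),
]:
    print(f"{label}: ${exposure(hypothetical_works, rate):,}")
```

Even at the statutory minimum, one million works comes to $750 million, so "billions" doesn't require a worst-case award. An actual figure would depend on how many works qualify and on what a jury awards per work.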

While Alsup's ruling gives us crucial clarity, we're still in the early innings of this game. This is just one federal district court's take, and I've learned to be cautious about declaring victory based on a single decision.

I expect we'll see Anthropic appeal to the Ninth Circuit, which could modify or even reverse parts of Alsup's reasoning. And honestly? I think we'll eventually need either the Supreme Court to step in and create nationwide clarity, or Congress to pass actual AI legislation. Neither seems likely in the near term, which means we're in for years of case-by-case battles as the law slowly catches up to the technology.